The inviting ecommerce website template that balances bright colors with plenty of white space. The stylized fonts for the headers ...

Search and Discovery writer

Imagine an online shopping experience designed to reflect your unique consumer needs and preferences — a digital world shaped completely around ...

Senior Digital Marketing Manager, SEO

Winter is here for those in the northern hemisphere, with thoughts drifting toward cozy blankets and mulled wine. But before ...

Sr. Developer Relations Engineer

What if there were a way to persuade shoppers who find your ecommerce site, ultimately making it to a product ...

Senior Digital Marketing Manager, SEO

This year a bunch of our engineers from our Sydney office attended GopherCon AU at University of Technology, Sydney, in ...

David Howden &

James Kozianski

Second only to personalization, conversational commerce has been a hot topic of conversation (pun intended) amongst retailers for the better ...

Principal, Klein4Retail

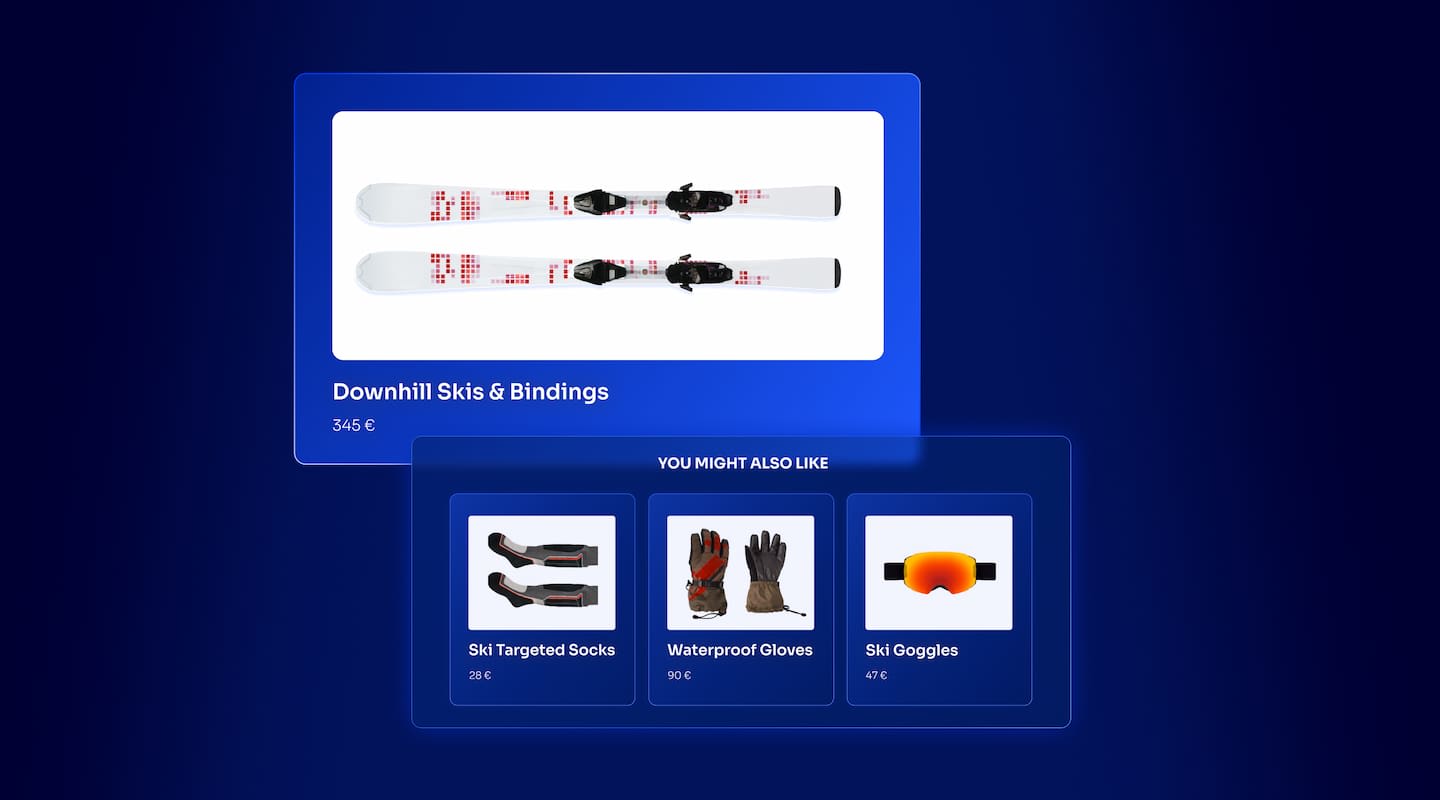

Algolia’s Recommend complements site search and discovery. As customers browse or search your site, dynamic recommendations encourage customers to ...

Frontend Engineer

Winter is coming, along with a bunch of houseguests. You want to replace your battered old sofa — after all, the ...

Search and Discovery writer

Search is a very complex problem Search is a complex problem that is hard to customize to a particular use ...

Co-founder & former CTO at Algolia

2%. That’s the average conversion rate for an online store. Unless you’re performing at Amazon’s promoted products ...

Senior Digital Marketing Manager, SEO

What’s a vector database? And how different is it than a regular-old traditional relational database? If you’re ...

Search and Discovery writer

How do you measure the success of a new feature? How do you test the impact? There are different ways ...

Senior Software Engineer

Algolia's advanced search capabilities pair seamlessly with iOS or Android Apps when using FlutterFlow. App development and search design ...

Sr. Developer Relations Engineer

In the midst of the Black Friday shopping frenzy, Algolia soared to new heights, setting new records and delivering an ...

Chief Executive Officer and Board Member at Algolia

When was your last online shopping trip, and how did it go? For consumers, it’s becoming arguably tougher to ...

Senior Digital Marketing Manager, SEO

Have you put your blood, sweat, and tears into perfecting your online store, only to see your conversion rates stuck ...

Senior Digital Marketing Manager, SEO

“Hello, how can I help you today?” This has to be the most tired, but nevertheless tried-and-true ...

Search and Discovery writer

Imagine you’re visiting an online art gallery and a specific painting catches your eye. You’d like to find similar artwork, but you’re not exactly sure why that particular painting’s aesthetic vibe speaks to you. If you search by the standard metadata language, your results will vary widely.

You’ll probably first look for paintings by the same artist, but what if this was their only painting, or it was inconsistent with their usual style? You could search by the year it was created, browse other paintings in the gallery, or look for paintings with similar colors, but the results would likely be spread out among hundreds of different artists, each with their own unique style.

If you lack the language to properly describe what you’re looking for, using the most common metadata will likely lead to unrelated recommendations that do not convey the vibe you’re after.

This content was originally presented at Algolia DevCon 2023.

So, how do you label a vibe?

Vibes are hard to put into words. A vibe is what lets you see at a glance that a product fits your needs. But how do you get your app to recognize this? Algolia Recommend’s new model, LookingSimilar, unlocks the power to suggest great-looking items from your catalog based on an image. LookingSimilar gives your app the gift of sight!

What does it take to generate solid image recommendations?

The thing with search is that users need to have at least a vague description of what they are searching for. Discovery is also a huge part of your user’s search journey. Users often explore your inventory through navigation and recommendations until they find that perfect item that vibes with them. Recommendations are an ancillary tool that expands on the search experience to surface inventory that is contextually relevant to your users.

Did the user add a low-margin item to their cart? You can recommend frequently bought together items that are likely to interest your user.

Did the user search for a product that is currently out of stock? You can recommend similar products.

But the quality of these recommendations depends on the quality of the data and how well it is labeled.
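
To make the "frequently bought together" idea concrete, here is a minimal sketch that simply counts which items show up in the same order. It is not how Algolia Recommend works internally (its models are trained on your product data and user interactions); the function names and the sample orders are made up for illustration.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders):
    """Count how often each unordered pair of items appears in the same order."""
    counts = Counter()
    for order in orders:
        # sorted(set(...)) removes duplicates and gives each pair a stable key.
        for a, b in combinations(sorted(set(order)), 2):
            counts[(a, b)] += 1
    return counts


def frequently_bought_with(item, orders, top_k=2):
    """Return up to top_k items most often purchased together with `item`."""
    partners = Counter()
    for (a, b), n in co_purchase_counts(orders).items():
        if a == item:
            partners[b] += n
        elif b == item:
            partners[a] += n
    return [partner for partner, _ in partners.most_common(top_k)]


# Hypothetical order history, invented for this example.
ORDERS = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["phone", "screen-protector"],
    ["laptop", "charger"],
]

print(frequently_bought_with("phone", ORDERS, top_k=1))
```

Even this toy version shows the dependency on data quality: if the same product is recorded under two different identifiers, its counts are split and the recommendation gets weaker.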

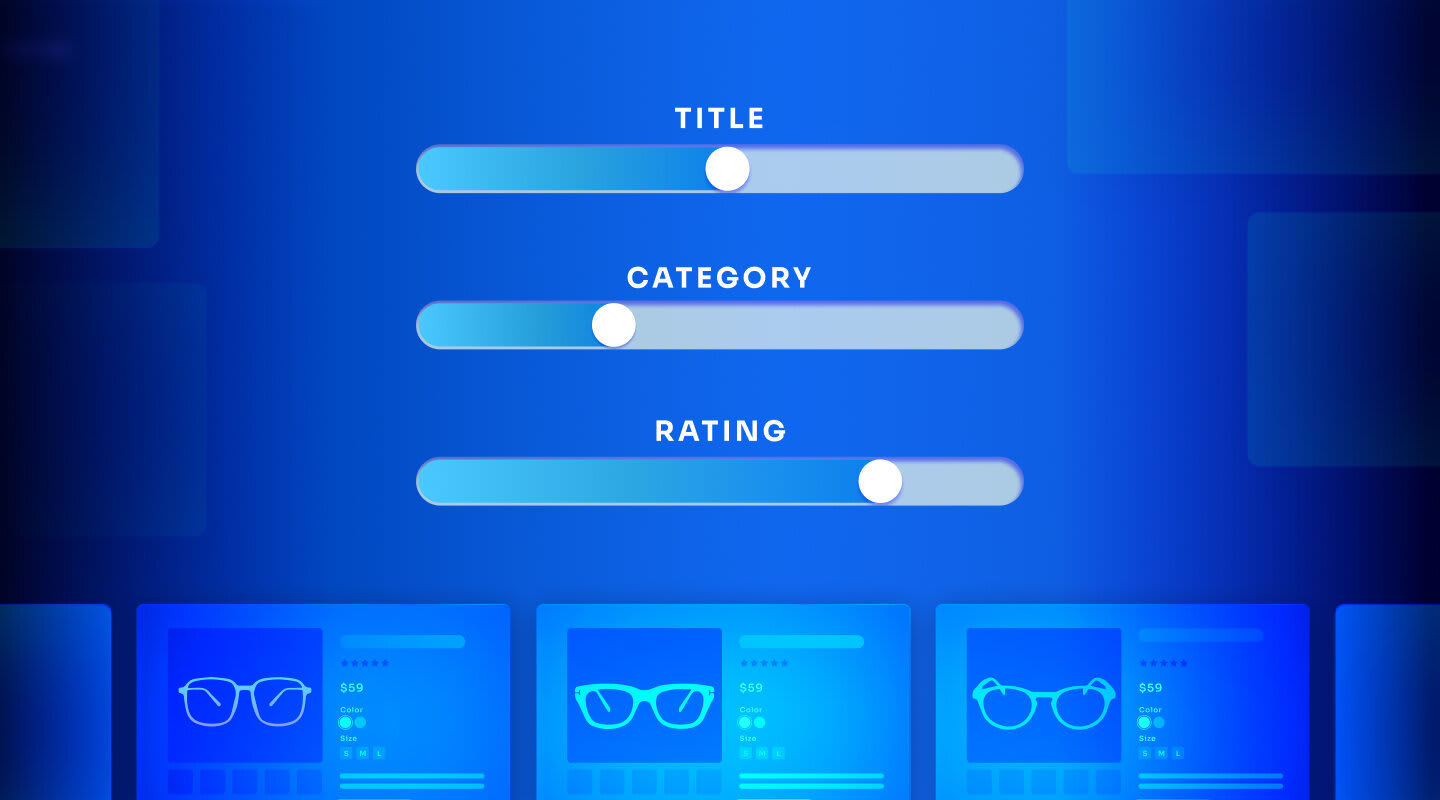

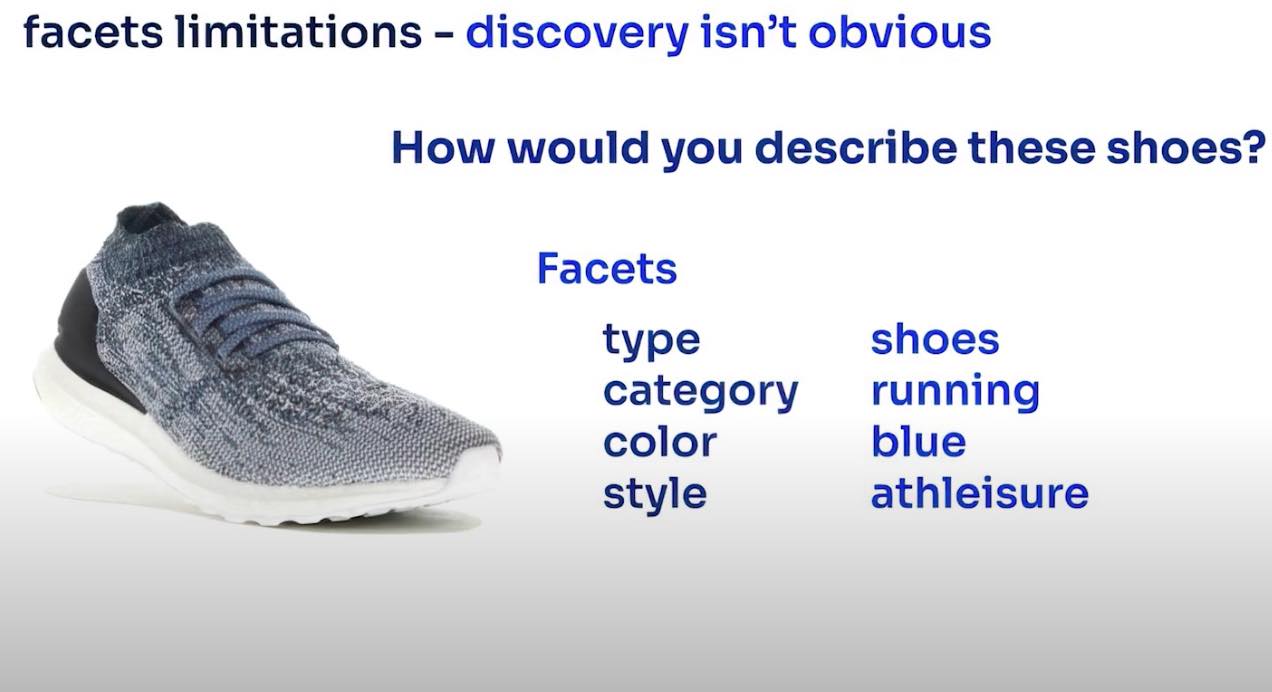

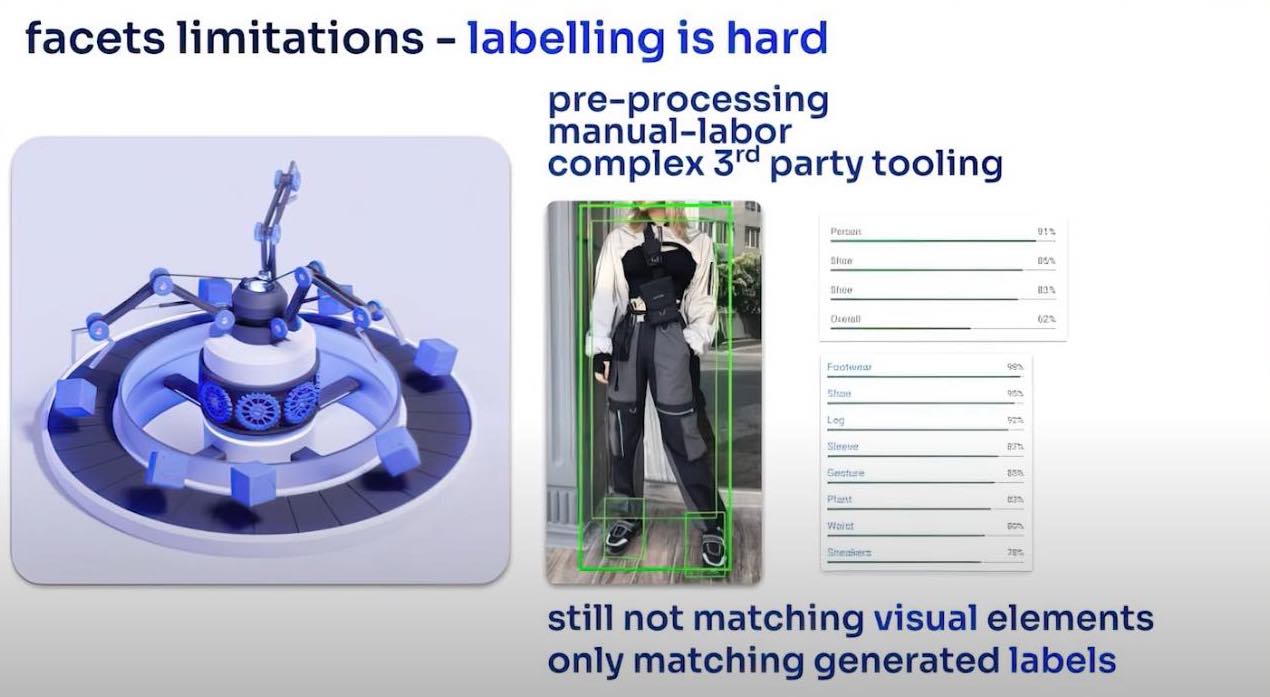

Labels in facets show limitations when both ends of the equation don’t share a similar language and vocabulary.

For example, how would you describe the shoes in the image below?

Traditionally, we would use facets such as title, description, colors, and other metadata to suggest related or complementary items. This works great if the data is well-labeled and the facets reflect what’s important to the user.

Take a look at the facets for this product.

Are these shoes? Sneakers? Or is there a different word that you prefer?

What category are they? Are they running shoes? Gym shoes? Are they slip-ons?

What color are they? Blue, beige, or gray? Does color only refer to the fabric? What about the white sole or the black heel?

What style are these shoes? Athleisure, casual, or sporty?

If you and your customers aren’t using the same language to describe these shoes, these facet limitations will make it harder to highlight your inventory, which will eventually cost you sales.

Labeling is hard work. Traditionally, labeling requires you to pre-process your data before you can properly display results to your users. For example, in your marketplace this might be done manually by your sellers. This method produces long forms, inconsistent data, and a lot of friction.

You could automate the process by generating labels programmatically through computer vision software – usually offered as an expensive and complicated extra service by big cloud providers.

For example, look at the outfit in the image below.

Leading computer vision software from a major cloud provider can detect objects fairly well, but the labels it produces are too generic to use in recommendations. You could label those objects, but it still would not be very helpful.

If the labeling were more advanced, the approach might seem promising. Yet this also has limitations, because the software is still not matching the actual images. Instead, it translates the visual elements into textual labels and then generates recommendations based on those translated labels.
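
A small sketch can show why generic labels fall short. Here, similarity between two items is measured as the overlap of their label sets (Jaccard similarity). The item names and labels are hypothetical, chosen to resemble the kind of generic output described above.

```python
def jaccard(labels_a, labels_b):
    """Jaccard similarity: shared labels divided by all distinct labels."""
    a, b = set(labels_a), set(labels_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical labels as a generic object detector might emit them.
LABELS = {
    "red-evening-dress": {"person", "clothing", "dress"},
    "denim-sundress": {"person", "clothing", "dress"},
    "hiking-boots": {"clothing", "footwear"},
}

# Two dresses with very different looks are indistinguishable by label.
print(jaccard(LABELS["red-evening-dress"], LABELS["denim-sundress"]))
print(jaccard(LABELS["red-evening-dress"], LABELS["hiking-boots"]))
```

The two dresses score as a perfect match even though a shopper would never confuse them, because everything that makes up their look was lost when the image was translated into text.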

What if you could extract recommendations and relationships automatically from your images?

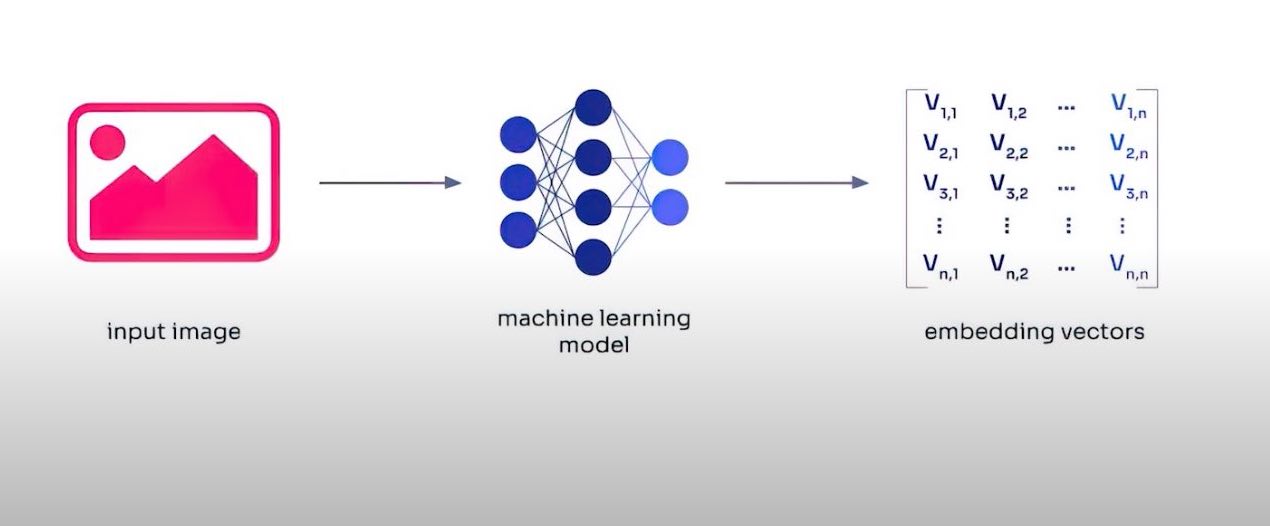

Algolia built a new machine learning engine that uses neural hashes to be highly efficient and cost-effective. This unlocks a whole new world of possibilities. The technology has many applications, including powering your visual recommendation use case.

How does it work?

We start with your raw input image and run it through our machine learning model. The model outputs the corresponding embedding vectors that will be used internally to recommend similar images. This vector-based visual approach produces some impressive results, as the examples below demonstrate.

For example, imagine you are searching for an apartment and you come across the image below. It’s an awesome-looking bathroom with a certain vibe to it. Maybe it’s the green tile, the glass open shower, or a combination of elements that speak to you in ways that you can’t describe.
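
As a rough illustration of what "recommending from embedding vectors" means, the sketch below ranks items by cosine similarity to a query item. The 4-dimensional vectors and item names are invented for the example (real image embeddings have hundreds of dimensions and come from a trained model), and this is a generic nearest-neighbor sketch rather than Algolia's implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean 'looks alike'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query_id, embeddings, top_k=3):
    """Rank every other item by similarity to the query item's embedding."""
    query = embeddings[query_id]
    scored = [
        (item_id, cosine_similarity(query, vector))
        for item_id, vector in embeddings.items()
        if item_id != query_id
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings, made up for illustration only.
EMBEDDINGS = {
    "green-tile-bathroom": [0.9, 0.1, 0.8, 0.0],
    "teal-tile-bathroom": [0.8, 0.2, 0.9, 0.1],
    "white-marble-bathroom": [0.6, 0.0, 0.1, 0.1],
    "rustic-kitchen": [0.1, 0.9, 0.2, 0.7],
}

for item_id, score in most_similar("green-tile-bathroom", EMBEDDINGS):
    print(f"{item_id}: {score:.3f}")
```

No label such as "green" or "tile" appears anywhere in the ranking logic. Closeness in vector space is the only signal, which is why this approach can match a vibe that nobody managed to describe.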

What if this apartment was rented before you had the chance to look at it? An image vector-based recommendation model can suggest relevant alternatives that share that same vibe without the need to describe exactly what it was.

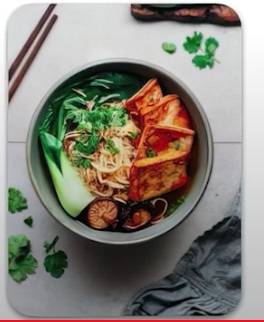

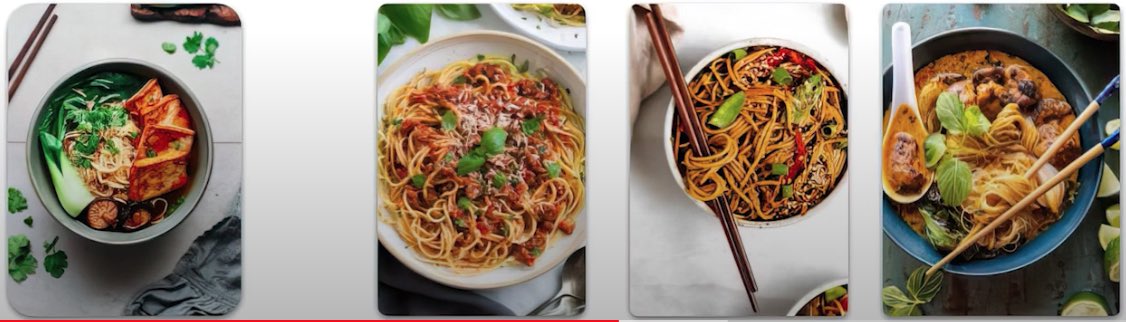

As another example, imagine you are looking for a restaurant for dinner and you come across the image of a delicious-looking dish below.

But unfortunately that restaurant is closed for the night. An image vector-based recommendation model can find similar delicious dishes that may not even be from the same region or cuisine.

This unlocks opportunities that traditional metadata-based systems could never achieve and expands the horizon to escape the algorithmic bubble that is limited by labeling.

Unfortunately, it’s not perfect. Image-based recommendations also show certain limitations.

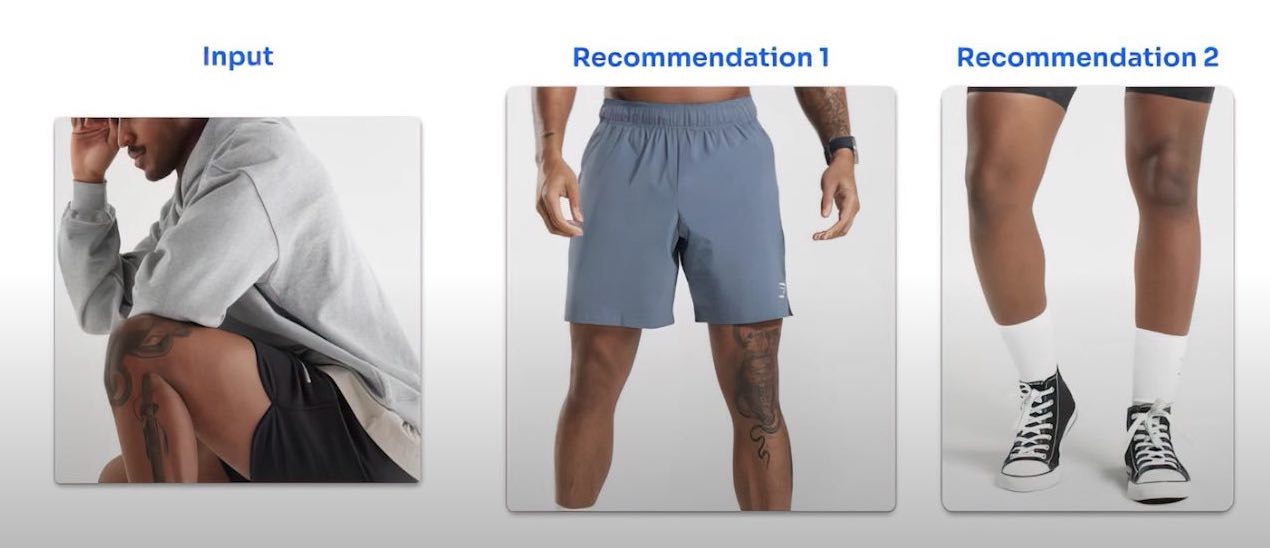

In the example below, the recommendations from the input of a relaxed gym outfit with a cozy hoodie and black shorts are not relevant to our users.

The model considers these good visual matches, but it misses the point. It may have latched on to the tattoo, or to the fact that all these images display legs. A bad metadata match won’t return relevant recommendations.

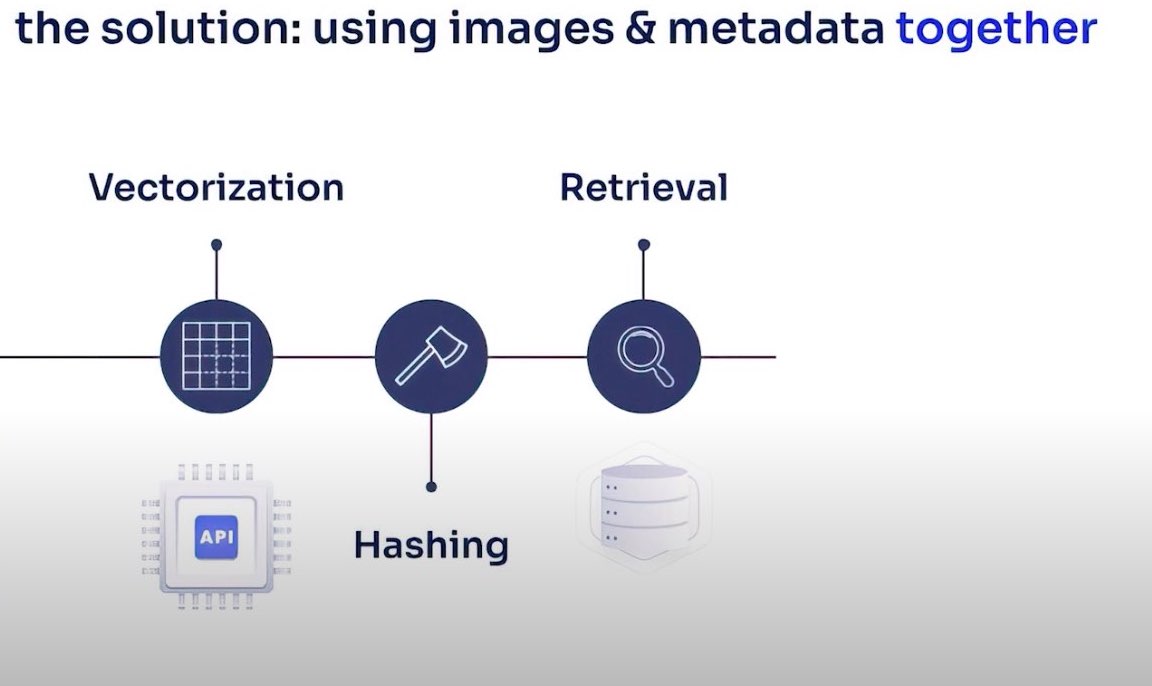

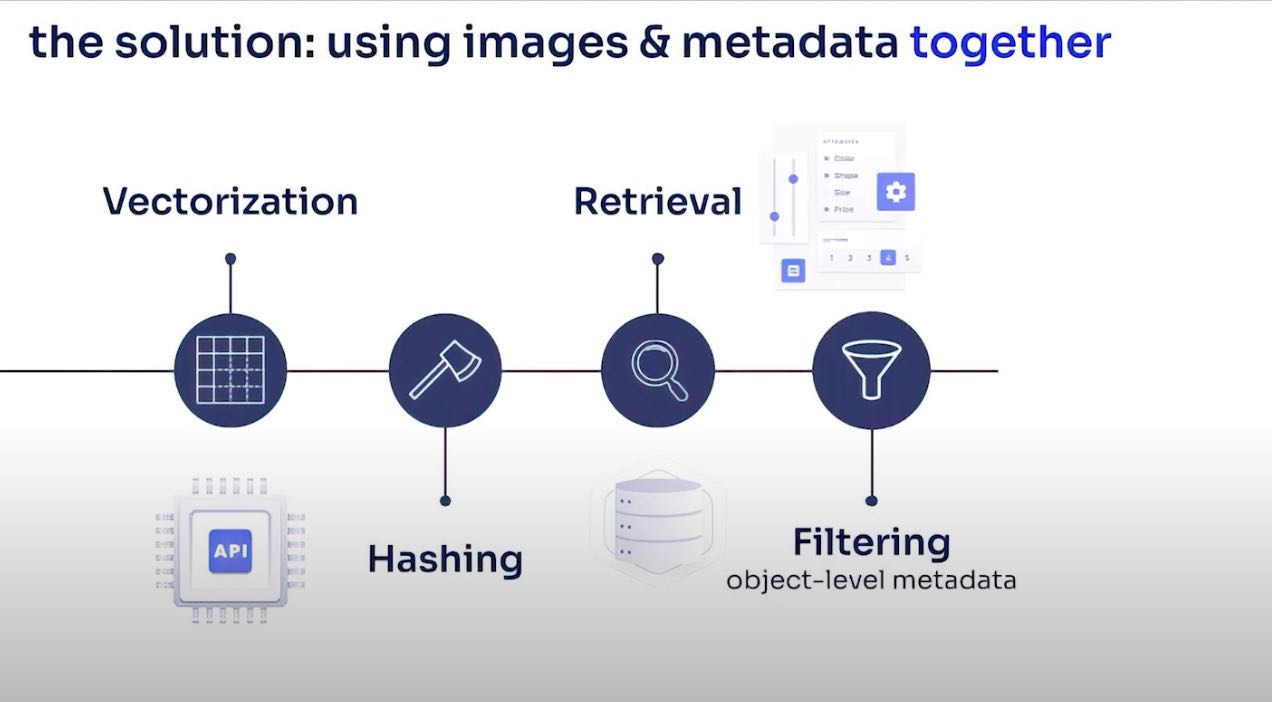

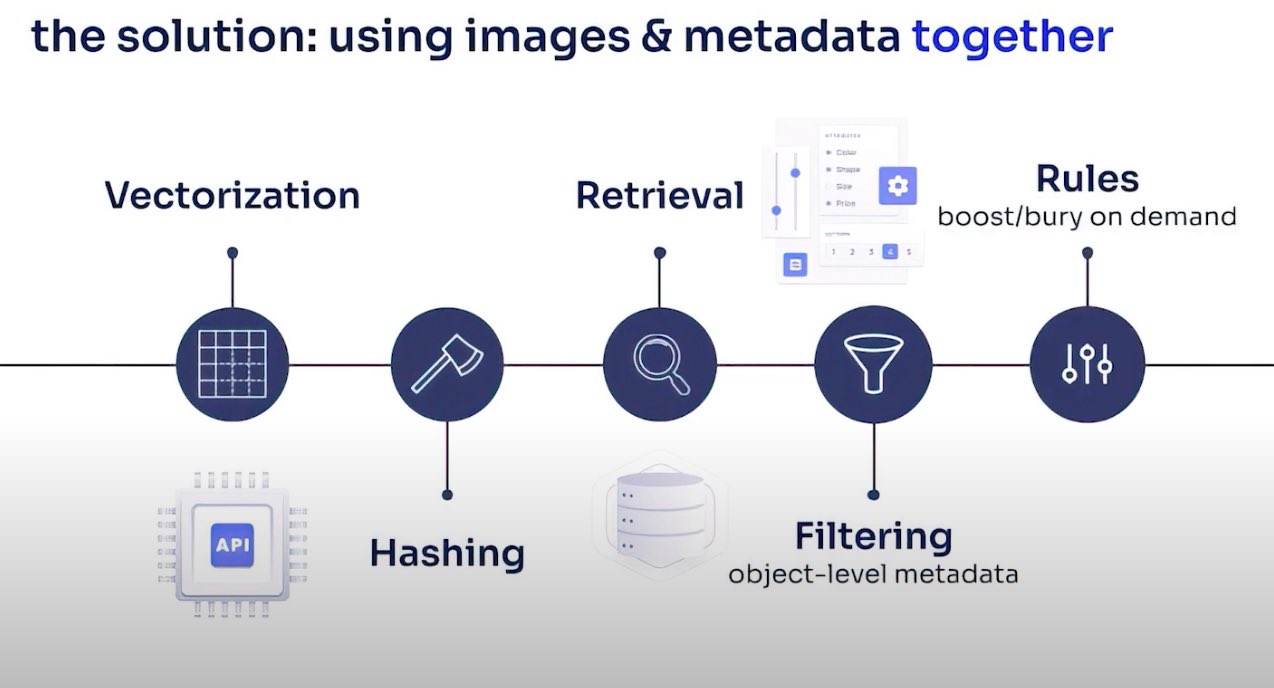

To fix this, Algolia layers a number of systems to get the best of both worlds – using images and metadata together to build a more efficient system.

We start by vectorizing the images that you send through Algolia APIs. Then we internally hash these into vectors and store them in our globally distributed databases for retrieval. If we stopped at this stage, we would encounter the same limitations of purely visual recommendations that we saw in the gym outfit example.

By layering object-level metadata filtering on top of these recommendations, we filter out recommendations that are not relevant and don’t match the desired category.
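
This post does not describe how neural hashing works internally, so the sketch below swaps in a different and much simpler technique, random-hyperplane locality-sensitive hashing, purely to show why compact binary codes are cheap to store and compare. Every name and parameter here is an assumption made for illustration.

```python
import random


def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random hyperplanes; each one contributes one bit of the code."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def binary_hash(vector, hyperplanes):
    """Encode a vector as an integer whose bits record which side of each plane it is on."""
    code = 0
    for plane in hyperplanes:
        dot = sum(w * x for w, x in zip(plane, vector))
        code = (code << 1) | (1 if dot >= 0 else 0)
    return code


def hamming_distance(code_a, code_b):
    """Number of differing bits; fewer differing bits suggests more similar vectors."""
    return bin(code_a ^ code_b).count("1")


planes = make_hyperplanes(dim=4, n_bits=16, seed=7)
bathroom = binary_hash([0.9, 0.1, 0.8, 0.0], planes)
kitchen = binary_hash([0.1, 0.9, 0.2, 0.7], planes)
print(hamming_distance(bathroom, kitchen))
```

Comparing two codes takes one XOR and a bit count, and each item needs only a few bytes of storage, which hints at how a hash-based index can stay fast and inexpensive at catalog scale.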
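
The filtering step can be sketched in a few lines: keep only the visual matches that share the source item's category. The catalog, attribute name, and scores below are hypothetical, and the real filters are configured through Algolia rather than written by hand like this.

```python
def filter_by_category(candidates, catalog, source_id):
    """Keep only candidates whose category matches the source item's category."""
    wanted = catalog[source_id]["category"]
    return [
        (item_id, score)
        for item_id, score in candidates
        if catalog[item_id]["category"] == wanted
    ]


# Hypothetical catalog records and visual-similarity scores.
CATALOG = {
    "gym-hoodie-outfit": {"category": "activewear"},
    "leg-tattoo-poster": {"category": "wall-art"},
    "running-shorts-set": {"category": "activewear"},
    "denim-cutoffs": {"category": "casualwear"},
}
VISUAL_MATCHES = [
    ("leg-tattoo-poster", 0.93),
    ("running-shorts-set", 0.88),
    ("denim-cutoffs", 0.86),
]

print(filter_by_category(VISUAL_MATCHES, CATALOG, "gym-hoodie-outfit"))
```

The top visual match is dropped because it belongs to the wrong category, which is the kind of correction the gym outfit example needed.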

Then with our powerful rules engine, we can dynamically boost an item that is on sale and downrank an item that is running out of stock.
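
Boosting and downranking can be pictured as multiplying each candidate's score by business-driven factors before sorting. The multipliers, threshold, and field names in this sketch are assumptions chosen for the example and are not Algolia's rule syntax.

```python
def apply_rules(candidates, catalog, sale_boost=1.2,
                low_stock_penalty=0.5, low_stock_threshold=3):
    """Re-rank candidates: boost items on sale, downrank items nearly out of stock."""
    rescored = []
    for item_id, score in candidates:
        item = catalog[item_id]
        if item.get("on_sale"):
            score *= sale_boost
        if item.get("stock", 0) <= low_stock_threshold:
            score *= low_stock_penalty
        rescored.append((item_id, score))
    rescored.sort(key=lambda pair: pair[1], reverse=True)
    return rescored


# Hypothetical inventory data and similarity scores.
INVENTORY = {
    "sofa-a": {"on_sale": False, "stock": 1},
    "sofa-b": {"on_sale": True, "stock": 10},
    "sofa-c": {"on_sale": False, "stock": 10},
}
MATCHES = [("sofa-a", 0.90), ("sofa-b", 0.85), ("sofa-c", 0.80)]

for item_id, score in apply_rules(MATCHES, INVENTORY):
    print(f"{item_id}: {score:.2f}")
```

The best visual match drops to last place because it is almost sold out, while the discounted item moves to the top, so visual similarity and merchandising goals both shape the final list.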

Putting all of this together results in a great merchandising experience where you only have to focus on your business needs and rules.

Now that you understand how it works, here is a bit of an absurd example. You’ve probably heard the idiom, “don’t judge a book by its cover,” but let’s try it out and explore book recommendations through cover similarities.

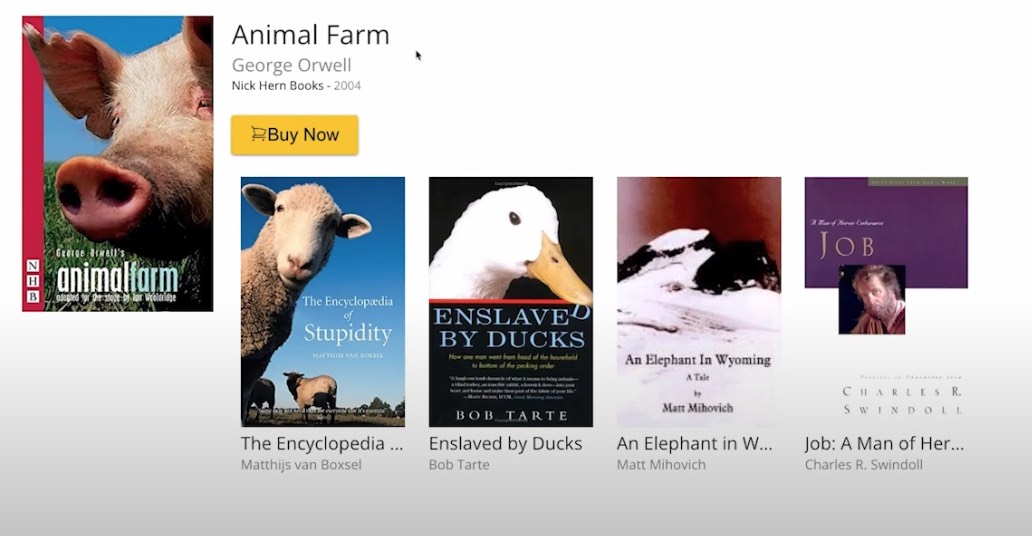

Let’s search for books written by George Orwell, for example Animal Farm. The recommendation model picked up on visual clues to recommend other books. You can see in the screenshot below that it picked up on the animal theme to suggest other books that wouldn’t have traditionally been recommended together.

This shows an interesting aspect of this technology. We could continue to navigate through the newly generated recommendations to see how far we can get away from the initial book.

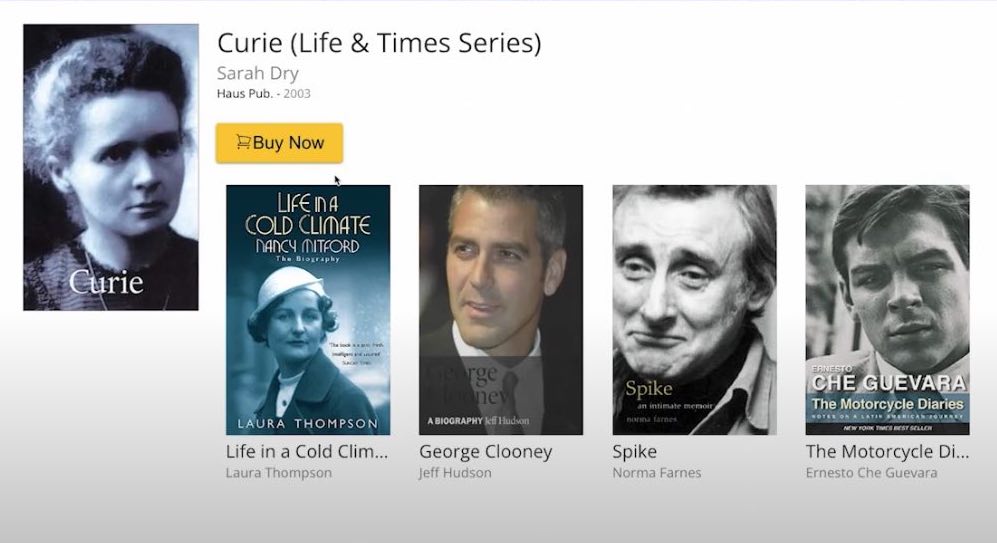

If you use the model to search for the biography of Marie Curie, you can see that it picked up on portraits and faces to recommend other books that wouldn’t have traditionally been bundled together.

Using the machine learning model to discover visual similarities, you can unlock interesting image recommendations to surface these items for your users.

These are just a few examples of what you can build with this new technology. Hopefully, it will inspire you to build something even more innovative.

To learn how Algolia can help you generate image-based recommendations, contact an Algolia AI search expert today.

Algolia Recommend relies on supervised machine learning models that are trained on your product data and user interactions to display recommendations on your website. Recommendations encourage users to expand their search and browse more broadly. Users can jump to similar or complementary items if they don’t find a precise match.

LookingSimilar is a visual analysis model that uses machine learning to generate image-based recommendations. This exciting new technology is currently in development by Algolia.

We look forward to seeing what you’ll build next!
